We Don't Know When We'll Get AGI (But It'll Be More Than Two Years)

Is superintelligence just around the corner, or is it decades away?

When ChatGPT vaulted into the public consciousness at the end of 2022, it was obvious to many observers that computers would soon become superintelligent. Seemingly overnight, AI had gone from laughably bad to frighteningly good. If we could make that much progress, that quickly, how long could it possibly take until machines became smarter than humans? The only question was whether they would remain our dutiful servants, or try to kill us before we had a chance to shut them off.

Silly as it may seem now, intellectual heavyweights from Yuval Noah Harari to Steve Wozniak rushed to sign a letter calling for a six-month pause in AI research. The implication was that potentially deadly computer intelligence was so close that it would be risky to wait even six months to see how things developed.

More recently, a group of futurologists led by Daniel Kokotajlo published an essay called AI 2027 laying out a detailed scenario for how superhuman AI could become a reality over the next 2-3 years. In 2021, Kokotajlo had made a bunch of mostly correct predictions about the shape of AI in 2026. So his updated forecast got a lot of press and attention. Spoiler: the AI gets superintelligent really fast, and decides to kill us before we have a chance to shut it off.

Unsurprisingly, people running AI companies are among the most optimistic about the advent of artificial general intelligence, or AGI. Cynics point out that they need to hype their wares to raise the huge sums required to train bigger and better models. A more generous take is that it’s human nature to “believe our own bullshit”. This is particularly true of entrepreneurs, who would never be willing to face the long odds of success if they weren’t exceptionally optimistic, even unrealistic, about their prospects.

And sure enough, Sam Altman, CEO of OpenAI, claimed in a January 2025 blog post that “We are now confident we know how to build AGI as we have traditionally understood it.” Elon Musk even assigned it a timeline:

Meanwhile, calmer heads have started to argue that AGI might not be so easy. Maybe it wouldn’t appear for many years or even decades… if ever.

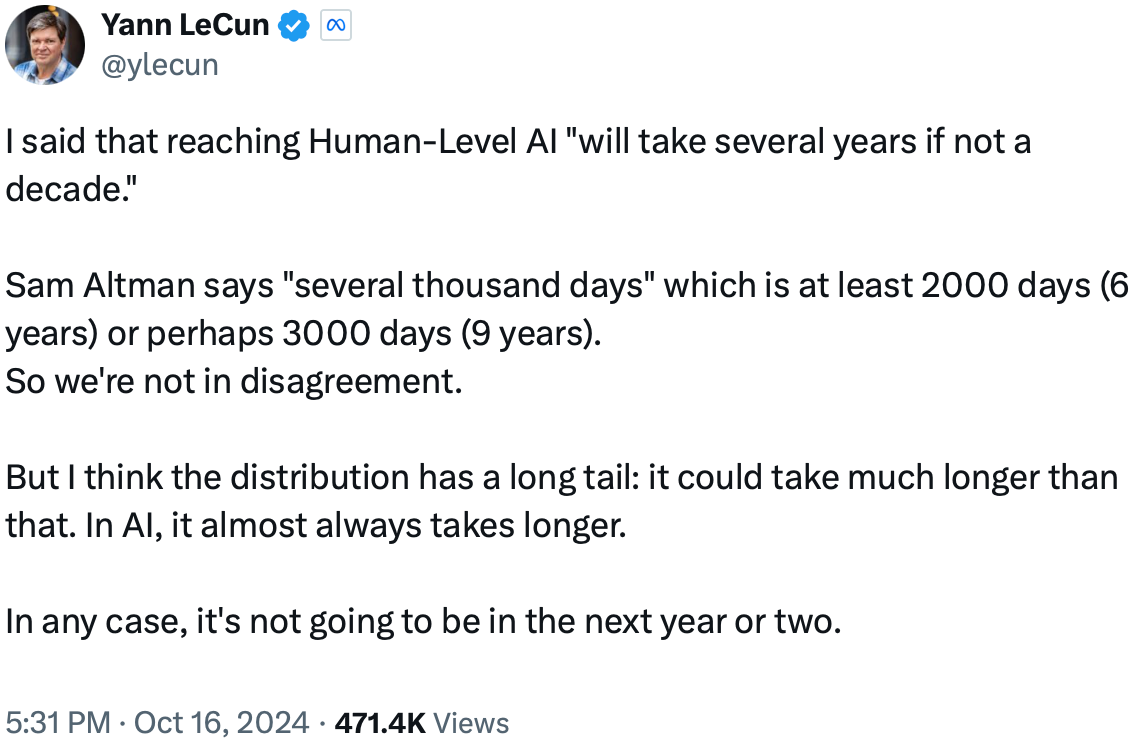

Meta’s Chief AI Scientist, Yann LeCun, is one of a number of prominent AI researchers who make this point:

Another prominent skeptic is Gary Marcus, who has been adamant that large language models like ChatGPT are not the way to achieve AGI. Marcus has spent the past 25 years arguing that deep learning—which underpins pretty much all modern AI, including LLMs—will never lead to true machine intelligence. In a recent article, he points to research by Apple as proof that LLMs (and more recent “large reasoning models” aka LRMs) aren’t really thinking: they are just slicing and dicing the data they absorbed during training:

…even the latest of these new-fangled “reasoning models” still—even having scaled beyond o1—fail to reason beyond the distribution reliably, on a whole bunch of classic problems, like the Tower of Hanoi. For anyone hoping that “reasoning” or “inference time compute” would get LLMs back on track, and take away the pain of multiple failures at getting pure scaling to yield something worthy of the name GPT-5, this is bad news.
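For readers who have not met it, the Tower of Hanoi asks you to move a stack of n disks from one peg to another, one disk at a time, never putting a larger disk on a smaller one. The sketch below is the textbook recursive solution (not code from Marcus or the Apple paper), plus a checker that replays the moves. The algorithm fits in a few lines, but the number of moves it must execute without a single slip is 2ⁿ − 1, so the difficulty can be dialed up just by adding disks.

```python
def hanoi(n: int, source: str = "A", target: str = "C", spare: str = "B") -> list[tuple[str, str]]:
    """Return the moves (from_peg, to_peg) that solve Tower of Hanoi for n disks."""
    if n == 0:
        return []
    # Park the top n-1 disks on the spare peg, move the largest disk to the
    # target, then restack the n-1 disks on top of it.
    return (
        hanoi(n - 1, source, spare, target)
        + [(source, target)]
        + hanoi(n - 1, spare, target, source)
    )


def is_valid_solution(n: int, moves: list[tuple[str, str]]) -> bool:
    """Replay the moves from peg A and check that no larger disk ever lands
    on a smaller one and that every disk ends up on peg C."""
    pegs = {"A": list(range(n, 0, -1)), "B": [], "C": []}  # last item = top disk
    for src, dst in moves:
        if not pegs[src]:
            return False
        disk = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disk:
            return False
        pegs[dst].append(pegs[src].pop())
    return pegs["C"] == list(range(n, 0, -1))


for n in (3, 8, 15):
    moves = hanoi(n)
    print(n, "disks:", len(moves), "moves, valid =", is_valid_solution(n, moves))
```

For 3, 8 and 15 disks it reports 7, 255 and 32,767 moves, each checked as valid.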

Meanwhile Rodney Brooks, another long-time AI pioneer, has been the most consistent voice pooh-poohing the idea that we’ll achieve AGI any time soon. Compared to him, Marcus is a relative newcomer to the debate; Brooks was already out there pushing back against the AI hype in 1988! Even then, he reminds us, inflated expectations about AI were decades old:

In his 1949 book GIANT BRAINS or Machines That Think, Edmund Berkeley reflected on the amazing capability of machines like ENIAC to perform 500 multiplications of two ten-digit numbers per second, and envisioned machines acting as automatic stenographers, translators, and psychiatrists.

Brooks has gone on to publish a lot of thoughtful analysis about why we always seem to believe that we are just about to achieve AGI, even though we aren’t. His 2017 blog post The Seven Deadly Sins of AI Predictions makes particularly worthwhile reading. (But if you do, read the edited version in the MIT Technology Review, if only for the whimsical illustrations by Joost Swarte.) He breaks down the cognitive and cultural traps that lead experts to constantly whiff on the AGI timeline—ranging from overgeneralizing narrow successes, to underestimating the complexity of the real world, to assuming straight-line progress.

So which is it? Will we get to AGI soon? Is it many years away? Or is it just a pipe dream?

A Quick Refresher Course on LLMs (and LRMs)

To answer these questions, it is helpful to have a bit of background on LLMs and deep learning in general. I won’t belabor the point since there are already a zillion LLM primers on the web: Andrej Karpathy’s amazing deep dive is a good choice if you have a few hours to spare.

With that said, ChatGPT—and all the ensuing buzz around AGI—was made possible by four key innovations:

Deep learning - all modern AI is based on layered neural networks where one set of neurons[1] applies weights to some inputs and then feeds the new values into another layer of neurons. These networks are great at tasks like speech recognition and image classification, but they are hard to train efficiently.

Transformers - efforts to apply deep learning to natural language were hampered by the fact that feeding in text one word at a time is really slow and cumbersome. It also makes it hard for the network to take older text into account, since information from earlier in a passage tends to fade as more words are fed in. Transformers, introduced in a 2017 paper by a group of Google researchers, use a clever mathematical trick called attention that lets the network efficiently take in large blocks of text all at once, while weighing how relevant each word is to every other word, however far apart they are.

Lots of digitized data - the amazing performance of LLMs is, to a large degree, down to the unbelievable amount of text now available to us in digital form. This includes websites, as well as vast libraries of digitized books, video and podcast transcripts, and online forums like Reddit and Stack Overflow.

Ever more powerful GPUs - A few days ago, Nvidia became the first company to reach $4 trillion in market cap. The reason is simple: they got on the GPU train early, initially as a way to efficiently display 3D graphics. Then, as luck would have it, the same math used for 3D turned out to be key to training neural networks. By scaling up the chips they had been working on since 1999, they almost single-handedly provided the vast computational resources needed to create the first modern LLMs.[2]
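The first and last items in this list are more closely connected than they might seem: the weighted sums a network layer computes are matrix multiplications, the same operation graphics cards use to rotate and project 3D objects. The minimal sketch below, in plain Python, shows one `matmul` function doing both jobs. It is illustrative only: GPUs spread these multiply-and-add operations across thousands of cores in parallel, real networks also add bias terms (omitted here), the weights are hypothetical hand-picked numbers, and ReLU is just one common choice of activation function.

```python
import math


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply an (n x k) matrix by a (k x m) matrix. Every output entry is an
    independent sum of products, which is why the work parallelizes so well."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def relu(m: list[list[float]]) -> list[list[float]]:
    """Activation function (an assumed choice): keep positives, zero out negatives."""
    return [[max(0.0, x) for x in row] for row in m]


# 3D graphics: rotate two vertices 90 degrees about the z-axis.
# Rows are (x, y, z) points, so we multiply by the transposed rotation matrix.
theta = math.pi / 2
rot_z_t = [
    [math.cos(theta), math.sin(theta), 0.0],
    [-math.sin(theta), math.cos(theta), 0.0],
    [0.0, 0.0, 1.0],
]
rotated = matmul([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]], rot_z_t)

# Neural network: the same function computes each layer's weighted sums.
# Hypothetical weights for a 3-input -> 2-neuron -> 1-neuron network;
# real models learn billions of such numbers during training.
w_hidden = [[0.5, 0.3], [-0.2, -0.8], [0.1, 0.1]]  # 3 inputs x 2 hidden neurons
w_output = [[2.0], [-1.0]]                         # 2 hidden neurons x 1 output
x = [[1.0, 2.0, 3.0]]                              # one input example

hidden = relu(matmul(x, w_hidden))  # the first layer's outputs...
output = matmul(hidden, w_output)   # ...become the next layer's inputs
print("rotated:", rotated)
print("hidden:", hidden, "output:", output)
```

The rotation sends (1, 0, 0) to roughly (0, 1, 0), and the second hidden neuron's negative sum is zeroed by ReLU before being passed on.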

So that’s how LLMs work: unimaginable amounts of text are fed into huge, deep neural networks that use transformers to make sense out of all that sequential data in a reasonably efficient way. The daunting computational complexity is tamed by the use of extremely powerful GPUs that are tailor-made to handle these types of calculations.
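The transformer's central trick is usually called attention. Here is a minimal, single-head sketch of the scaled dot-product attention introduced in the 2017 paper, in plain Python with tiny made-up vectors. Real models also learn separate projections for queries, keys and values, encode word order, stop each word from looking ahead at later ones, and run many attention heads in parallel; all of that is left out. What remains is the core idea: each position is scored against every position, with no need to walk through the text word by word, and its output is a weighted average of the value vectors.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(
    queries: list[list[float]], keys: list[list[float]], values: list[list[float]]
) -> list[list[float]]:
    """Single-head scaled dot-product attention.

    Each query is compared with every key (dot product scaled by sqrt(d)),
    the scores become weights via softmax, and the output for that position
    is the weighted average of the value vectors. Each loop iteration is
    independent of the others, so on a GPU they can all run in parallel.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Three made-up 2-dimensional "token" vectors. For simplicity the same vectors
# serve as queries, keys and values; real models derive each from learned weights.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```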

Once the limitations of the original LLMs became apparent, vendors like OpenAI (with o1), DeepSeek (with R1) and Anthropic (with Claude 3.7 Sonnet) came up with LRMs. Instead of generating the output text directly, the LLM first writes a kind of step-by-step plan. It then uses this plan to guide the generation process. LRMs are still LLMs at heart, but have proven much more capable at certain kinds of tasks like solving math problems or reasoning through multi-step logic puzzles.
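The difference between answering directly and planning first can be caricatured in a few lines. In the purely hypothetical sketch below, no language model is involved: `write_plan` stands in for the reasoning phase by breaking a multiplication into smaller steps, and `answer_from_plan` stands in for the final phase by reading the answer off the last step. Real LRMs produce both the reasoning and the answer as ordinary generated text from the same network; the sketch only mirrors the two-phase structure.

```python
def write_plan(a: int, b: int) -> list[str]:
    """Stand-in for the 'reasoning' phase (hypothetical): break a * b into
    smaller steps by splitting b into its tens and its ones."""
    tens, ones = divmod(b, 10)
    big = a * tens * 10
    small = a * ones
    return [
        f"{a} x {tens * 10} = {big}",
        f"{a} x {ones} = {small}",
        f"{big} + {small} = {big + small}",
    ]


def answer_from_plan(plan: list[str]) -> int:
    """Stand-in for the 'answer' phase (hypothetical): the final output is
    read off the last step of the plan instead of being produced in one shot."""
    return int(plan[-1].split("=")[-1])


plan = write_plan(17, 24)
for step in plan:
    print(step)
print("Answer:", answer_from_plan(plan))
```

Running it prints the three intermediate steps followed by `Answer: 408`.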

AG-Aye or AG-Nay?

The more optimistic predictions about AGI all assume (explicitly or implicitly) that it will be powered by LLMs. It has taken decades to get to where we are today; any significantly different approach would almost certainly take more than a couple of years to bear fruit.

Sure enough, the most prominent contestants in the race to AGI have been focused on supercharging LLMs, mainly by training them with more data and more compute. Take Kokotajlo’s AI 2027, which tries to lay out a fictional path for getting to AGI in the next couple of years. It is no coincidence that the essay contains a lot of verbiage describing the bigger and more powerful data centers that will be created by the major players:[3]

GPT-4 required 2⋅10²⁵ FLOP of compute to train. OpenBrain’s latest public model—Agent-0—was trained with 10²⁷ FLOP. Once the new datacenters are up and running, they’ll be able to train a model with 10²⁸ FLOP—a thousand times more than GPT-4. Other companies pour money into their own giant datacenters, hoping to keep pace.
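Taking the quoted figures at face value, the ratios to GPT-4's training compute work out as follows:

```latex
\frac{10^{27}\ \text{FLOP}}{2 \cdot 10^{25}\ \text{FLOP}} = 50,
\qquad
\frac{10^{28}\ \text{FLOP}}{2 \cdot 10^{25}\ \text{FLOP}} = 500
```

Strictly speaking, then, 10²⁸ FLOP is 500 times GPT-4's compute, so the essay's "a thousand times" is best read as a rounded order of magnitude.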

In the real world, OpenAI has been following a similar path. When ChatGPT was first released, it used GPT-3.5, a refined version of GPT-3, as its underlying model. A few months later, it moved on to GPT-4. While GPT-4’s exact parameter count—basically the size of the neural network—has not been disclosed, it is estimated to be about 10 times that of GPT-3. Among other things, this means that far more high-quality data was needed to train it.
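As a rough illustration of what a "parameter count" measures, the sketch below tallies the weights and biases in a simple fully connected network with hypothetical layer sizes. Transformer layers are arranged differently, so this is not how GPT-3 or GPT-4 would be counted exactly, but the principle is the same: every adjustable number that training tunes counts as one parameter. For scale, GPT-3 has 175 billion of them.

```python
def count_parameters(layer_sizes: list[int]) -> int:
    """Count the trainable numbers in a fully connected network.

    Between consecutive layers of sizes n_in and n_out there are
    n_in * n_out weights (one per connection) plus n_out biases.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical network: 784 inputs, one hidden layer of 128 neurons, 10 outputs.
print(count_parameters([784, 128, 10]))  # 101770
```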

GPT-4 is way better than GPT-3, so naturally there was a lot of excitement about the potential of the next version: GPT-5. OpenAI didn’t do much to dampen expectations. In a speech at Stanford University in April 2024, Sam Altman made it clear he expected big things from future models:

GPT 5 is gonna be smarter than, a lot smarter than GPT 4, GPT 6 can be a lot smarter than GPT 5 and we are not near the top of this curve and we kind of know what to do and this is not like it's gonna get better in one area. This is not like we're gonna, you know, it's not that it's always gonna get better at this eval or this subject or this modality. It's just gonna be smarter in the general sense.

Last September, the President of OpenAI Japan predicted in a speech at a Japanese tech event that “GPT Next” would appear “soon” and would represent around a 100x performance improvement over GPT-4.

Then something funny happened. In December, reports started to appear about how OpenAI’s efforts to create their next big model had stalled. When they finally released the new model in February 2025, they named it GPT-4.5, rather than GPT-5, presumably to play down the hype and expectations. Developers were given access to the model, but only as a “preview”. And in April, just two months after it was released, OpenAI announced they were going to remove developer access “in the coming months”.

In other words, the effort to push forward quickly to AGI by cramming more parameters, data and compute into existing LLM architectures had failed.

So does this mean that AGI skeptics like Gary Marcus and Rodney Brooks have been right all along? Yes and no.

Marcus and Brooks are both super smart guys who are experts in their fields. Nonetheless, their arguments can seem a bit dated and even downright curmudgeonly at times.

As deep learning has gone from strength to strength, culminating in the latest generation of LLMs and LRMs, Marcus’s arguments have taken on an increasingly shrill tone, as in this description of how the AI community reacted to his 2018 paper critical of deep learning:

The leaders of deep learning hated me for challenging their baby, and couldn’t tolerate any praise for the paper. When an influential economist Erik Brynjolfsson (then at MIT) complimented the article on Twitter, (“Thoughtful insights from @GaryMarcus on why deep learning won't get us all the way to artificial general intelligence”), Hinton’s long time associate Yann LeCun tried to contain the threat, immediately replying to Brynjolfsson publicly that the paper was “Thoughtful, perhaps. But mostly wrong nevertheless.”

Marcus is right that LLMs have begun to reach their limits, but seems unwilling to accept that deep learning has won because it has yielded incredible results, not because of some global conspiracy aimed at belittling his own (far less successful) efforts. And he regularly conflates the shortcomings of LLMs with those of deep learning in general when falsely accusing rivals like LeCun of secretly agreeing with (or even stealing) his ideas.

Brooks, meanwhile, has been banging the same downbeat drum since 2018 in his annual Predictions Scorecard. Overoptimistic prognoses about the future of AI have always been wrong in the past, he says, so they must be wrong now as well:

But this time it is different you say. This time it is really going to happen. You just don’t understand how powerful AI is now, you say. All the early predictions were clearly wrong and premature as the AI programs were clearly not as good as now and we had much less computation back then. This time it is all different and it is for sure now.

Yeah, well, I’ve got a Second Coming to sell you…

Brooks’s writing is intelligent and informed. It is worth reading, despite its occasionally smarmy and sarcastic tone. His skepticism about claims by AI proponents, informed by decades working in the field, is totally understandable.

But still, it is dangerous to assume that claims about some emerging technology must be wrong now since they were always wrong in the past. The same types of arguments were made about previous advances like electric lighting, airplanes and mobile phones. Consider the following statements, for example, made a few years—or even mere months—before the Wright Brothers’ first flight:

“Heavier-than-air flying machines are impossible.”[4]

- Lord Kelvin, President of the Royal Society, circa 1895

“Aerial flight is one of that class of problems with which man will never be able to cope.”

- Simon Newcomb, astronomer and mathematician, October 1903

“It might be assumed that the flying machine which will really fly might be evolved by the combined and continuous efforts of mathematicians and mechanicians in from one million to ten million years.”

- New York Times (editorial page), 1903

All new technologies are dismal failures… until they aren’t.

A more balanced view was expressed by Demis Hassabis, who leads AI research at Google, in a recent interview:

We’re seeing incredible gains with the existing techniques, pushing them to the limit. But we’re also inventing new things all the time as well. And I think to get all the way to something like AGI may require one or two more new breakthroughs.

It is becoming increasingly obvious to everyone involved that the initial hysteria around LLMs was overblown. They are incredibly useful tools and represent a huge step forward for AI, but they alone are not enough to achieve AGI. The addition of reasoning capabilities has made them a lot more useful, but recent research suggests that LRMs won’t get us to human-level intelligence either.

So we’re in search of “one or two more new breakthroughs”. Maybe they will arrive relatively quickly. But as LeCun said in the tweet quoted earlier, “the distribution has a long tail”. In plain English, this means that even top experts don’t really have a clue. Considering that we don’t even know what these breakthroughs might be, or how many will be needed, it is possible AGI won’t be reached for decades. Maybe never.

That is probably too pessimistic. But when you hear claims that superintelligence is just a year or two away, you can safely laugh them off.

[1] Okay, they’re not really neurons, but they act in a way broadly analogous to the physical neurons in our brains.

[3] They use made-up names to protect the innocent. It’s impossible to guess who they mean by “OpenBrain”.

[4] This specific quote might be apocryphal, but it broadly represented academic sentiment at the time.