GPT-5 Didn't Live Up to the Hype—But Maybe That's a Good Thing

Instead of waiting for a magical superintelligence, let's focus on building AI-powered apps that bring immediate value to real users.

When OpenAI finally released GPT-5 last week, expectations were sky-high. For years we have been told that LLMs are on an exponential growth trajectory. When ChatGPT was launched in 2022, the underlying model, GPT-3, was already two years old. So when GPT-4 was released a few months later, it was natural to perceive the jump as almost instantaneous[1]. When reasoning models like o1 appeared in 2024, their superior abilities cemented the view that LLM performance was improving at an accelerating pace.

The evidence, however, says otherwise. There haven’t really been any major advances in LLM performance since the first cohort of reasoning models. Nor is there an obvious vector for achieving exponential progress. Most of the recent innovations have been around LLMs as systems—automatic model selection, better web browsing capabilities, etc.—rather than improvements to the underlying models themselves.

For a while, it seemed like building bigger and bigger models trained on more and more data might be the way forward, but that didn't work out so well.

Then something funny happened. In December, reports started to appear about how OpenAI's efforts to create their next big model had stalled. When they finally released the new model in February 2025, they named it GPT-4.5 rather than GPT-5, presumably to play down the hype and expectations. Developers were given access to the model, but only as a "preview". And in April, just two months after it was released, OpenAI announced they were going to remove developer access "in the coming months".

In other words, the effort to push forward quickly to AGI by cramming more parameters, data and compute into existing LLM architectures had failed.

Yet despite indications to the contrary, the idea that AI is about to achieve escape velocity has been hard to quash. Sam Altman is partly to blame, as he’s zigzagged between teasing the incredible capabilities of yet-to-be-released models and playing them down. Well-known pundits have promoted the idea that AGI is just a few years away. And there is a large and vocal community of AI proponents awaiting the arrival of superintelligence with almost religious fervor.

With expectations this inflated, it was inevitable that GPT-5 would be a letdown. And it was. According to an op-ed in the Washington Post:

> OpenAI’s latest chatbot model, GPT-5, is an improved artificial-intelligence tool: faster, more capable, more accurate. But it’s not the technomagic wand some AI optimists hoped for. The leap to “superintelligence,” the prize behind $400 billion in Big Tech investment this year, now looks later rather than sooner, if even possible.

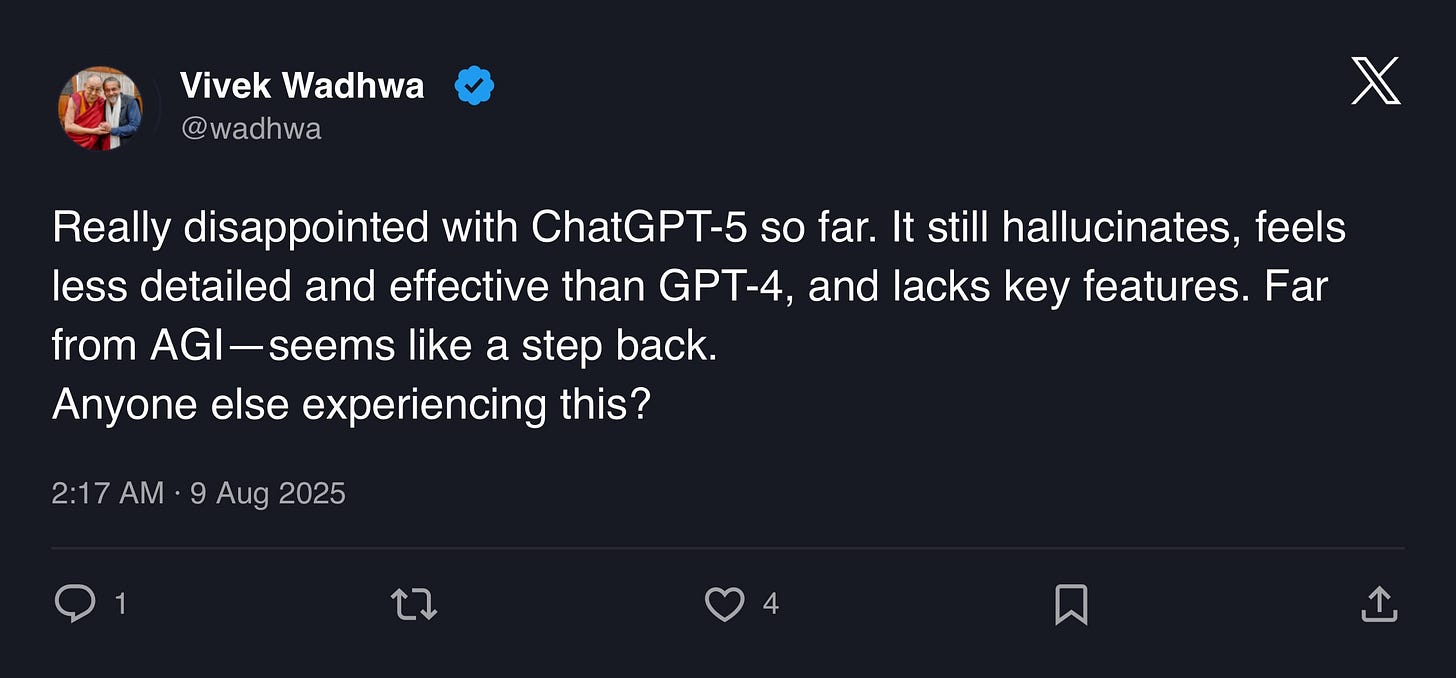

Some of the criticism boils down to people not liking stuff they're not used to[2]. But there is also a palpable sense of disappointment among those who were hoping that GPT-5 would represent a giant step towards AGI.

All of this was predictable. Any hopes pinned on the impending singularity were driven more by motivated reasoning than by solid technical grounds.

It's true that benchmark scores have improved steadily with each successive model release. At the same time, this may have more to do with AI vendors tuning their models specifically to perform well on popular tests than with any improvement in the models' ability to perform real-world tasks. Dean Valentine, CEO of AI cybersecurity startup ZeroPath, described how his company's experience using newer models differed from what the benchmarks might suggest:

> I have read the studies. I have seen the numbers. Maybe LLMs are becoming more fun to talk to, maybe they're performing better on controlled exams. But I would nevertheless like to submit, based off of internal benchmarks, and my own and colleagues' perceptions using these models, that whatever gains these companies are reporting to the public, they are not reflective of economic usefulness or generality. They are not reflective of my Lived Experience or the Lived Experience of my customers. In terms of being able to perform entirely new tasks, or larger proportions of users' intellectual labor, I don't think they have improved much since August.

So there is ample cause for skepticism about claims that models are achieving “exponential progress”, whether these are based on the results of public benchmarks or just wishful thinking.

While some may have been crestfallen to discover that GPT-5 was not the quantum leap they had been hoping for, from a practical perspective this may not be such a bad thing. After all, we should really be focused right now on producing better AI-powered software solutions that provide real value to real people. From the ZeroPath piece[3]:

> Depending on your perspective, this is good news! Both for me personally, as someone trying to make money leveraging LLM capabilities while they're too stupid to solve the whole problem, and for people worried that a quick transition to an AI-controlled economy would present moral hazards.

As a fellow application developer leveraging AI to reimagine an existing software category[4], I entirely share these sentiments. Since the initial release of ChatGPT, I have been convinced that we have a rare opportunity to apply a new technology in a way that solves UX and functional challenges that would previously have been intractable. At the same time, I've been terrified of being caught in the vice-like grip of rapidly improving AI: on the one hand, forced to use technology that might not yet be up to the task; on the other hand, concerned that future versions might be so smart that they render all our efforts redundant.

Seen in this light, the lack of anything revolutionary in OpenAI’s latest release is a good thing. If we aren’t going to achieve AGI any time soon, we can shift our focus to the task of creating great AI-powered apps using current technology. New LLM versions with new capabilities are to be welcomed, even if they offer only incremental improvement. Some may lament that we are no closer to superintelligence than we were a week ago. To the extent that this serves as a wake-up call for the software industry, however, that might not be such a bad outcome.

[1] The gap between GPT-3 and GPT-4 was actually almost three years.

[2] This is probably just the price of success for companies with a large and devoted user base. Facebook, for example, has suffered regular backlashes over the years, only for users to later embrace the changes that initially enraged them.

[3] It's a great post, by the way, and very much worth reading in full.

[4] In our case, 3D product configurators.

I agree with your premise, nice write up. I do have a question to dig into your thinking:

Yes, GPT-5 isn't much of a leap from o3 (slightly better on benchmarks and cheaper?). However, if the only model you had experience with was the original GPT-4, the one people were using to rewrite the Declaration of Independence in the style of Snoop Dogg in early 2023, wouldn't GPT-5-Thinking seem like a fairly shocking upgrade in capability?

It's only been 2.5 years since GPT-4 was released, and we've covered a lot of capability ground in that time. The incremental releases we've seen since then have led to a 'frog in boiling water' effect, which OpenAI has said is their intent: many small incremental upgrades will give society a better chance to adapt.

All that said, I do agree that GPT-5's overall capabilities make me more pessimistic that we will see something akin to AGI in the next ~2-3 years.